INSIGHTs

The interview checklist: hiring CRO expertise

Hire Smart

Looking to hire a CRO agency or consultant? Don't make a decision without the right information. Arm yourself with seven crucial questions that will help you separate the pros from the pretenders.

This concise checklist offers expert tips on evaluating potential partners, avoiding common pitfalls, and ensuring you choose a CRO team that can truly deliver results. Whether you're new to CRO or looking to switch providers, this checklist is your first step toward success. While you're searching for CRO expertise, don't miss our post on 10 Red Flags When Choosing Your CRO Agency.

In this podcast you’ll learn:

- Which companies can and cannot benefit from CRO.

- The importance of a cultural shift in your CRO strategy.

- Why CRO expertise is critical for running a successful program.

- Customer engagement.

- Effective forecasting when optimizing your conversion rates.

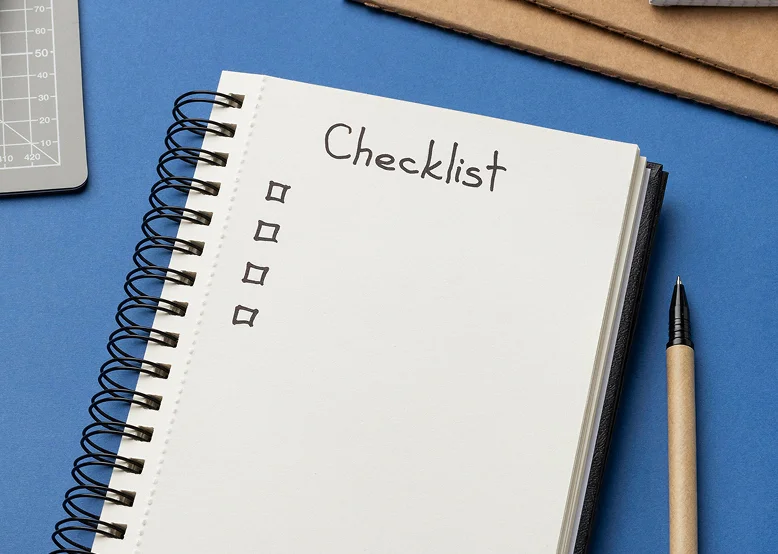

The Debate on Interaction Effects

Many experts argue that interaction effects can significantly skew your data, making it hard to draw accurate conclusions. They worry that multiple tests running simultaneously can create a mess of cross-pollinated results, leading to false positives and increased variance. This is especially concerning for low-traffic sites that need clear, actionable data.
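To make the concern concrete: when two tests overlap, every visitor lands in one of four cells (control or variant of Test A, crossed with control or variant of Test B). A common way to quantify an interaction is a difference-in-differences: compare Test B's lift among visitors who saw Test A's variant with Test B's lift among visitors who saw Test A's control. The Python sketch below uses hypothetical conversion counts, and a real analysis would also need a significance test on this contrast, which is omitted here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    """Results for one combination of Test A and Test B variants."""
    visitors: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.visitors


def interaction_effect(cells: dict[tuple[int, int], Cell]) -> float:
    """Difference-in-differences on conversion rate.

    `cells` maps (test_a_variant, test_b_variant) -> Cell, where 0 = control, 1 = variant.
    Returns Test B's lift when Test A shows its variant minus Test B's lift when
    Test A shows control, in absolute conversion-rate points. A value near zero
    means the two tests' effects roughly add up independently.
    """
    lift_b_with_a_variant = cells[(1, 1)].rate - cells[(1, 0)].rate
    lift_b_with_a_control = cells[(0, 1)].rate - cells[(0, 0)].rate
    return lift_b_with_a_variant - lift_b_with_a_control


# Hypothetical results for two overlapping tests (not real data)
cells = {
    (0, 0): Cell(visitors=10_000, conversions=300),  # 3.0%
    (0, 1): Cell(visitors=10_000, conversions=330),  # 3.3%
    (1, 0): Cell(visitors=10_000, conversions=320),  # 3.2%
    (1, 1): Cell(visitors=10_000, conversions=390),  # 3.9%
}
print(f"Interaction: {interaction_effect(cells):+.4f}")  # Interaction: +0.0040
```

In these made-up numbers, Test B lifts conversion by 0.3 points when Test A shows control but by 0.7 points alongside Test A's variant, so reading either test in isolation would misstate its effect.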

Questions that would be useful to ask:

- How do you estimate the potential ROI for your CRO projects? Be wary of any agency or consultant that projects annualized results or revenue; this is not an accurate way to represent CRO success, because too many variables can influence annualized projections.

- How does your team stay updated with the latest CRO tools and strategies? This question can help you make sure the team actually specializes in CRO and isn’t trying to be a jack-of-all-trades.

- Can you walk me through your process for planning and conducting a test? Make sure this process includes pre-test calculations and tons of research. If it doesn’t, that’s a red flag!

- Can you provide an example of a time when you were unable to meet a client’s expectations? No matter how hard an agency tries, sometimes it will fall short. If an agency or consultant tells you that they always deliver, they might not be giving you an accurate picture of their experience, or they may be lacking experience.

- What methodologies do you currently employ in your CRO tests? Make sure your potential agency or consultant isn’t proposing to test only one thing at a time or to run lots of small tests; if they are, they’re using outdated methodologies.

- Talk to me about the differences between, and your preference for, Bayesian vs. frequentist and one-tailed vs. two-tailed testing. If they can’t speak to the differences or have no idea what you’re asking about, then they don’t have the depth of knowledge needed to be a true CRO partner for your business. You also want to make sure your agency or consultant has opinions. If they are just willing to say “yes” and never push back against a client, you’re unlikely to see significant results from your CRO efforts.

- What specific benefits have your clients seen from using AI in your projects? If they are overselling you on AI, run. While AI can be useful for some aspects of digital marketing, it should not be the leading focus of your CRO strategy.
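Pre-test calculations and the one-tailed vs. two-tailed question are easy to probe with a quick sample-size estimate. The sketch below uses the standard normal-approximation formula for comparing two conversion rates; the baseline rate, minimum detectable effect (MDE), alpha, and power values are illustrative assumptions, not recommendations. A strong partner should be able to explain why the one-tailed version needs fewer visitors and what you give up in exchange.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline: float,
    mde_relative: float,
    alpha: float = 0.05,
    power: float = 0.80,
    two_tailed: bool = True,
) -> int:
    """Approximate visitors needed per variant to detect a relative lift.

    Normal approximation for two independent proportions:
    n = (z_alpha + z_beta)^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    p1 = baseline
    p2 = baseline * (1 + mde_relative)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("baseline and lifted rate must be distinct values in (0, 1)")
    std_normal = NormalDist()
    # Two-tailed tests split alpha across both tails; one-tailed tests spend it on one side
    z_alpha = std_normal.inv_cdf(1 - alpha / 2 if two_tailed else 1 - alpha)
    z_beta = std_normal.inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Illustrative inputs: 3% baseline conversion rate, 10% relative MDE
print(sample_size_per_variant(0.03, 0.10))                    # two-tailed
print(sample_size_per_variant(0.03, 0.10, two_tailed=False))  # one-tailed
```

With these inputs the two-tailed estimate is roughly 53,000 visitors per variant and the one-tailed estimate roughly 42,000; the smaller number comes from only looking for a lift in one direction.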

Together, they share actionable insights and discuss the methods they use to continuously elevate their campaigns. Don’t miss this opportunity to gain cutting-edge strategies from the experts at the forefront of data-driven marketing.

What is a hypothesis?

A hypothesis is a statement about a specific research question, and the key point is that it outlines the expected result of the experiment.

It is more than a fancy guess. It's a strategic bet on how a tweak to your site can change user behavior to hit your targets—like boosting sales or leads, not just b.s. metrics like pageviews. Anyone focused on the bottom line knows that what really matters is how these changes affect transactions and leads, not just clicks.

Resource: Public Resources

Practical Insights from Experts

Don’t just take my word for it.

Andrew Anderson

Senior Conversion Rate Optimization Manager at Limble CMMS, points out that the real world is full of noise, and perfect testing conditions are rare. By minimizing human error and maintaining good test design, you can mitigate the risks associated with running multiple tests.

Lukas Vermeer

Director of Experimentation at Vista, highlights that while multivariate testing (MVT) has its benefits, simultaneous A/B tests are not inherently riskier. Balancing speed and accuracy based on the importance of each test is key.

Matt Gershoff

CEO of Conductrics, suggests that unless there's a significant overlap and likelihood of extreme interactions, simultaneous testing is generally fine.

Solutions

At the end of the day, CRO is about delivering real, resonating value to your users while progressively improving KPIs. You can’t do that based on subjective instincts.

What you need is a research-driven approach that generates hypotheses from genuine customer insights. Here are a few of my favorites, plus resources:

Empathy mapping

Map out your users’ mindsets, environments, and motivations to build deep empathy with them. By stepping into your users’ shoes, you’ll reach new realizations that can inspire fresh ideas.

Resource: How to turn research insights into test ideas blog post

Diary studies

Conduct diary studies to experience organic journeys firsthand. Have participants record details like pain points, motivations, and context to capture rich, in-the-moment qualitative data.

Resource: Moderated vs Unmoderated user testing

Social listening

Listen in on the unsolicited convo happening across social channels.

Resource: Turning qualitative data into quantitative data training video

With a balanced mix of quantified behavior tracks and qualitative "why" insights like these and others, you can craft testing roadmaps centered around how users actually think and operate - not just how your team thinks they should.
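Turning qualitative data into something you can count often starts with a codebook: a list of themes and the signals that indicate each one. The sketch below tallies themes across diary-study entries or social comments using simple phrase matching. The themes, phrases, and snippets are hypothetical, and phrase matching is a crude stand-in for a human coder; it only shows how coded feedback becomes frequencies you can prioritize.

```python
from collections import Counter

# Hypothetical codebook: theme -> phrases that signal it
CODEBOOK = {
    "price_concern": ("expensive", "price", "cost"),
    "trust": ("scam", "reviews", "secure"),
    "navigation": ("couldn't find", "confusing", "menu"),
}


def code_feedback(snippets: list[str]) -> Counter:
    """Count how many snippets mention each theme (each theme counted once per snippet)."""
    counts: Counter = Counter()
    for text in snippets:
        lowered = text.lower()
        for theme, phrases in CODEBOOK.items():
            if any(phrase in lowered for phrase in phrases):
                counts[theme] += 1
    return counts


# Hypothetical diary entries and social comments
snippets = [
    "Shipping cost was way too expensive at checkout",
    "Couldn't find the size guide anywhere",
    "Is this site a scam? No reviews on the product page",
    "The menu is confusing on mobile",
    "Love it, but the price jumped after adding insurance",
]
for theme, n in code_feedback(snippets).most_common():
    print(f"{theme}: {n}")
```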

Multivariate Testing (MVT)

Welcome to the big leagues, where we don't just test one thing at a time—we test everything all at once. With MVT, you can mix and match elements like a master chef experimenting with ingredients. Just don't forget your fire extinguisher. Expanding on the scenario we used earlier, let's say you want to optimize not only the CTA button but also other elements on your homepage, such as the headline, hero image, testimonials, and description. You create multiple variations for each element: different headlines, images, and descriptions.

Through multivariate testing, you're able to test all possible combinations of these elements simultaneously. By analyzing the performance of each combination, you can identify the most effective combination of elements to maximize conversions. Our friends at VWO put this image together to help show the difference between multivariate testing and A/B testing.
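The number of combinations grows multiplicatively, which is why MVT needs far more traffic than an A/B test. The sketch below enumerates a full-factorial design with Python's `itertools.product`; the element names and variations are hypothetical placeholders loosely based on the homepage scenario above.

```python
from itertools import product

# Hypothetical variations for each homepage element
elements = {
    "headline": ["Headline 1", "Headline 2", "Headline 3"],
    "hero_image": ["human + product", "product only"],
    "cta": ["Buy now", "Add to cart"],
    "description": ["short", "long"],
}


def full_factorial(elements: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return every combination of one variation per element."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


combinations = full_factorial(elements)
print(len(combinations))  # 24 (3 x 2 x 2 x 2)
print(combinations[0])
```

Three headlines, two hero images, two CTAs, and two descriptions already produce 24 combinations, and each one needs enough visitors on its own to be measured.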

Strategies to Minimize Interaction Effects

If you’re still not convinced, there are some steps you can take to minimize interaction effects between your tests.

Mutual Exclusions

Avoid launching more than one test at a time on the same page or set of pages unless they’re designed with mutual exclusions and you have a high volume of traffic. This helps in isolating the effects of each test.

Example:

- Test A: 50% of visitors see either Headline 1 or Headline 2.

- Test B: The other 50% see either Button 1 or Button 2.

By ensuring that no visitor is part of both tests simultaneously, you isolate the effects of each change, reducing the risk of skewed data.
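One common way to implement mutual exclusion is deterministic hashing: hash each visitor ID into a bucket, send the first half of buckets to Test A and the second half to Test B, then use a second, independent hash to pick the variant within that test. The sketch below assumes a stable visitor ID (for example, a first-party cookie value) and uses hypothetical salt strings; testing platforms implement this in their own ways.

```python
import hashlib


def bucket(visitor_id: str, salt: str, buckets: int = 100) -> int:
    """Deterministically map a visitor to a bucket in [0, buckets)."""
    digest = hashlib.sha256(f"{salt}:{visitor_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def assign_exclusive(visitor_id: str) -> tuple[str, str]:
    """Put each visitor in exactly one of two tests, then pick a variant within it."""
    # Exclusion layer: buckets 0-49 -> Test A, 50-99 -> Test B (hypothetical salt)
    if bucket(visitor_id, "exclusion-layer") < 50:
        test, variants = "A", ("Headline 1", "Headline 2")
    else:
        test, variants = "B", ("Button 1", "Button 2")
    # A different salt keeps the variant choice independent of the layer split
    variant = variants[bucket(visitor_id, f"test-{test}", buckets=2)]
    return test, variant


print(assign_exclusive("visitor-123"))  # same result on every call for this ID
```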

Test-by-Test Consideration

Analyze the potential for interaction effects with each new test. If you have a large amount of traffic, some scenarios allow overlap without significant data corruption, especially if variations are equally distributed among the different tests.

Example:

- Scenario analysis: You analyze the potential interaction effects and conclude that the product image and description changes might have minimal overlap in user attention.

- Overlap consideration: Since your site has high traffic and variations are equally distributed, you decide that the overlap between these tests is acceptable.

You proceed with running both tests simultaneously, confident that any minor interactions won’t significantly corrupt your data.
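If you do let tests overlap, the usual safeguard is to randomize each test independently so every combination of variants receives a roughly equal share of traffic. The sketch below hashes each visitor with a per-test salt (the test names are hypothetical) and reports the share of traffic in each of the four cells; shares near 25% indicate the variations are evenly distributed across the two tests.

```python
import hashlib
from collections import Counter


def variant(visitor_id: str, test_name: str) -> int:
    """Independent 50/50 assignment per test: 0 = control, 1 = variant."""
    digest = hashlib.sha256(f"{test_name}:{visitor_id}".encode("utf-8")).digest()
    return digest[0] % 2


def cell_shares(visitor_ids, test_1: str, test_2: str) -> dict:
    """Share of visitors in each (test_1 variant, test_2 variant) cell."""
    counts = Counter((variant(v, test_1), variant(v, test_2)) for v in visitor_ids)
    total = sum(counts.values())
    return {cell: n / total for cell, n in sorted(counts.items())}


ids = [f"visitor-{i}" for i in range(20_000)]
# Hypothetical test names for the product-image and description tests
for cell, share in cell_shares(ids, "product-image", "description").items():
    print(cell, f"{share:.1%}")
```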

Attribution vs Impact

Evaluate what’s more crucial for your strategy—detailed attribution or overall impact. This decision will guide how aggressively you run simultaneous tests.

Example:

- Detailed attribution: If you need precise data on which change (checkout process vs. promotional offer) specifically led to conversions, you might opt to run these tests separately.

- Overall impact: However, if your primary goal is to boost conversions quickly and you’re less concerned about pinpointing the exact source of the improvement, you might choose to run both tests simultaneously.

In this case, you decide that the overall impact on conversions is more important than detailed attribution. You run both tests at the same time, focusing on maximizing business growth rather than perfecting attribution.

Multi-Arm Bandit Testing (MAB)

Last but not least, we have multi-arm bandit testing. With MAB, you can sit back and let the algorithm do the heavy lifting while you sip your coffee and watch the magic happen. The key difference is that rather than focusing on statistical significance, you’re learning and earning higher conversions at the same time, which suits short-term use cases. With traditional testing (the other three options mentioned above), you learn first and earn afterward.

With MAB, an algorithm dynamically adjusts traffic allocation based on real-time performance data. For example, if the variation with the human and product image plus the “add to cart” CTA consistently outperforms the others, the algorithm will allocate more traffic to that variation while reducing traffic to underperforming variations. Over time, the MAB algorithm learns which combinations yield the best results and optimizes traffic allocation accordingly, leading to improved conversion rates with minimal manual intervention.
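One widely used bandit algorithm is Thompson sampling; the text above doesn't name a specific algorithm, so treat this as one illustrative choice. For each visitor, it draws a plausible conversion rate for every variation from a Beta distribution built on that variation's results so far, then shows the variation with the highest draw. The simulation below uses hypothetical true conversion rates that the algorithm never sees directly.

```python
import random


def thompson_sampling(true_rates: list[float], n_visitors: int, seed: int = 0) -> list[int]:
    """Simulate Beta-Bernoulli Thompson sampling; return visitors shown each variation."""
    rng = random.Random(seed)
    k = len(true_rates)
    successes = [0] * k
    failures = [0] * k
    for _ in range(n_visitors):
        # Sample a plausible conversion rate for each variation from Beta(1 + s, 1 + f)
        draws = [rng.betavariate(1 + successes[i], 1 + failures[i]) for i in range(k)]
        arm = max(range(k), key=lambda i: draws[i])
        # Simulated visitor converts with the variation's (hidden) true rate
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [successes[i] + failures[i] for i in range(k)]


# Hypothetical true conversion rates for three variations
traffic = thompson_sampling([0.02, 0.03, 0.06], n_visitors=20_000)
print(traffic)  # most visitors should end up on the third variation
```

Because better-performing variations win more draws, traffic shifts toward them automatically; the tradeoff is that weaker variations collect fewer observations, which makes precise comparisons between them harder.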

Building a Bulletproof Hypothesis

Focus on metrics that matter.

Forget vanity metrics. Your hypothesis should aim to impact key performance indicators (KPIs) that translate directly to your bottom line, such as conversion rates and average order value.

Keep it structured, keep it real.

Stick to the “If… then… because…” format. It’s straightforward and keeps you honest about what you’re changing, what you expect to happen, and why. Example: "If we make the value proposition crystal clear right on the homepage, then bookings will spike because visitors instantly grasp what’s on offer."

Know your audience inside out.

Effective hypotheses are born from a deep understanding of who your users are and what drives their behavior. Use this insight to guide your proposed changes.

What to Avoid at All Costs

Don’t rely on gut feelings.

Base your hypothesis on data, not hunches. Your creativity needs to be backed by analysis and real user data. Relying on gut feelings can lead you astray and waste resources - this is a fast-track to spaghetti testing. We also wrote an entire blog post on why “what if” might be sabotaging your CRO testing and what to do about it.

Avoid predicting exact numbers.

Setting up exact numeric predictions sets you up for failure. CRO is about testing and learning, not crystal ball predictions. Predictions should be directional rather than precise to allow for flexible learning and adaptation.

Skip the fluffy language.

Drop words like "think," "feel," or "believe." They have no place in a hypothesis. Stick to what you can test and prove. Using vague language undermines the clarity and testability of your hypothesis, making it harder to derive meaningful insights.
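The guidance above can be turned into a simple pre-launch check. The sketch below stores a hypothesis in the “If… then… because…” structure and flags the vague words listed above, plus exact numeric predictions in the expected outcome. The class name and the regular expression for "exact numbers" are hypothetical; the pattern is a rough heuristic, not a complete rule.

```python
import re
from dataclasses import dataclass

# Words the guidance above says have no place in a hypothesis
FLUFFY_WORDS = ("think", "feel", "believe")
# Rough, hypothetical heuristic for exact predictions such as "12%", "3.5x", or "20 percent"
EXACT_NUMBER = re.compile(r"\d+(?:\.\d+)?\s*(?:%|x\b|percent)", re.IGNORECASE)


@dataclass
class Hypothesis:
    change: str     # what you will change ("If ...")
    outcome: str    # directional expected result ("then ...")
    rationale: str  # why, grounded in research ("because ...")

    def render(self) -> str:
        return f"If {self.change}, then {self.outcome} because {self.rationale}."

    def problems(self) -> list[str]:
        """Return guideline violations; an empty list means it passes this check."""
        issues = []
        text = self.render().lower()
        for word in FLUFFY_WORDS:
            if re.search(rf"\b{word}\b", text):
                issues.append(f"vague word: {word!r}")
        if EXACT_NUMBER.search(self.outcome):
            issues.append("exact numeric prediction in outcome")
        return issues


good = Hypothesis(
    change="we make the value proposition crystal clear right on the homepage",
    outcome="bookings will increase",
    rationale="visitors instantly grasp what's on offer",
)
print(good.render())
print(good.problems())  # []
```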

TL;DR

Interaction effects? Sure, they’re a thing, but don’t let them paralyze you. In the world of CRO, being too cautious can be just as risky as being reckless. You’ve got to strike a balance between getting it right and getting it done.

Remember, this is about business growth, not crafting the perfect academic paper. Run your tests, watch your metrics that actually affect your bottom line, and keep pushing forward. Let the competitors worry about perfection while you're busy boosting your profits.

Our Process

Over 6 months, we evaluated critical aspects such as:

CRO program & team maturity

Current CRO processes and testing velocity, training gaps and operational bottlenecks

Strategic planning & prioritization

Experiment prioritization frameworks, hypothesis quality and consistency

At the end, we delivered:

- Comprehensive experimentation playbook

- Quarterly CRO roadmap tailored to business priorities

- A fully designed and implemented program management hub

Royal Caribbean and Celebrity Cruises were operating CRO initiatives but needed a more structured and scalable approach. Chirpy partnered closely with eCommerce, content, and merchandising teams to build internal expertise, align on priorities, and create sustainable processes for running high-velocity tests. Beyond frameworks and playbooks, we instilled a culture of experimentation and provided hands-on guidance to implement best-in-class testing programs — from statistical design through to action-oriented reporting.
