INSIGHTs

Interaction Effects in Tests: Should You Worry?

"Interaction effects" is a term that gets thrown around a lot in the CRO world. But do they actually matter, or is this just another industry buzzword?

Picture this:

A user comes to your homepage, entering test A.

They navigate to the category page, participating in test B.

Moving on to the product page involves them in test C.

Adding a product to the cart triggers test D.

Completing the checkout process engages them in test E.

Sounds like a typical shopping spree, but here’s the kicker: Are these tests messing with each other’s results? Who gets the glory when the user finally hits 'buy'?

The Debate on Interaction Effects

Many experts argue that interaction effects can significantly skew your data, making it hard to draw accurate conclusions. They worry that multiple tests running simultaneously can create a mess of cross-pollinated results, leading to false positives and increased variance. This is especially concerning for low-traffic sites that need clear, actionable data.

Why We Think Interaction Effects Are Overblown

Real-World Complexity

Websites and user behavior are inherently complex. While interaction effects can occur, they often aren't as disruptive as some fear. In practice, the potential overlap between tests is usually minimal, and any interactions are unlikely to dramatically affect your results.

Practical Insights from Experts

Don’t just take my word for it. Lots of experts agree (check out this awesome article from CXL for more details!)…

- Andrew Anderson, Senior Conversion Rate Optimization Manager at Limble CMMS, points out that the real world is full of noise, and perfect testing conditions are rare. By focusing on minimizing human error and maintaining good test design, the risks associated with multiple tests can be mitigated.

- Lukas Vermeer, Director of Experimentation at Vista, highlights that while multivariate testing (MVT) has its benefits, simultaneous A/B tests are not inherently riskier. Balancing speed and accuracy based on the importance of each test is key.

- Matt Gershoff, CEO of Conductrics, suggests that unless there's a significant overlap and likelihood of extreme interactions, simultaneous testing is generally fine.

Why You Shouldn’t Worry Too Much About Interaction Effects

We believe the benefits of running multiple tests simultaneously often outweigh the potential downsides. Here’s why:

- Increased Testing Velocity: Running multiple tests at once allows you to gather more data and insights quickly, which is crucial for staying competitive and continuously optimizing your site.

- Precision and Accuracy: You can still achieve precise results with multiple tests. Using robust testing tools and methodologies helps control for interactions and ensure reliable outcomes.

- Practical Approach: Unless you expect extreme interactions and significant overlap, simultaneous tests are usually fine. Most of the time, the impact of one test on another is minimal, and the benefits of faster insights far outweigh the risks.

For help mapping out what to test and when, check out our blog on building an A/B testing roadmap.

Strategies to Minimize Interaction Effects

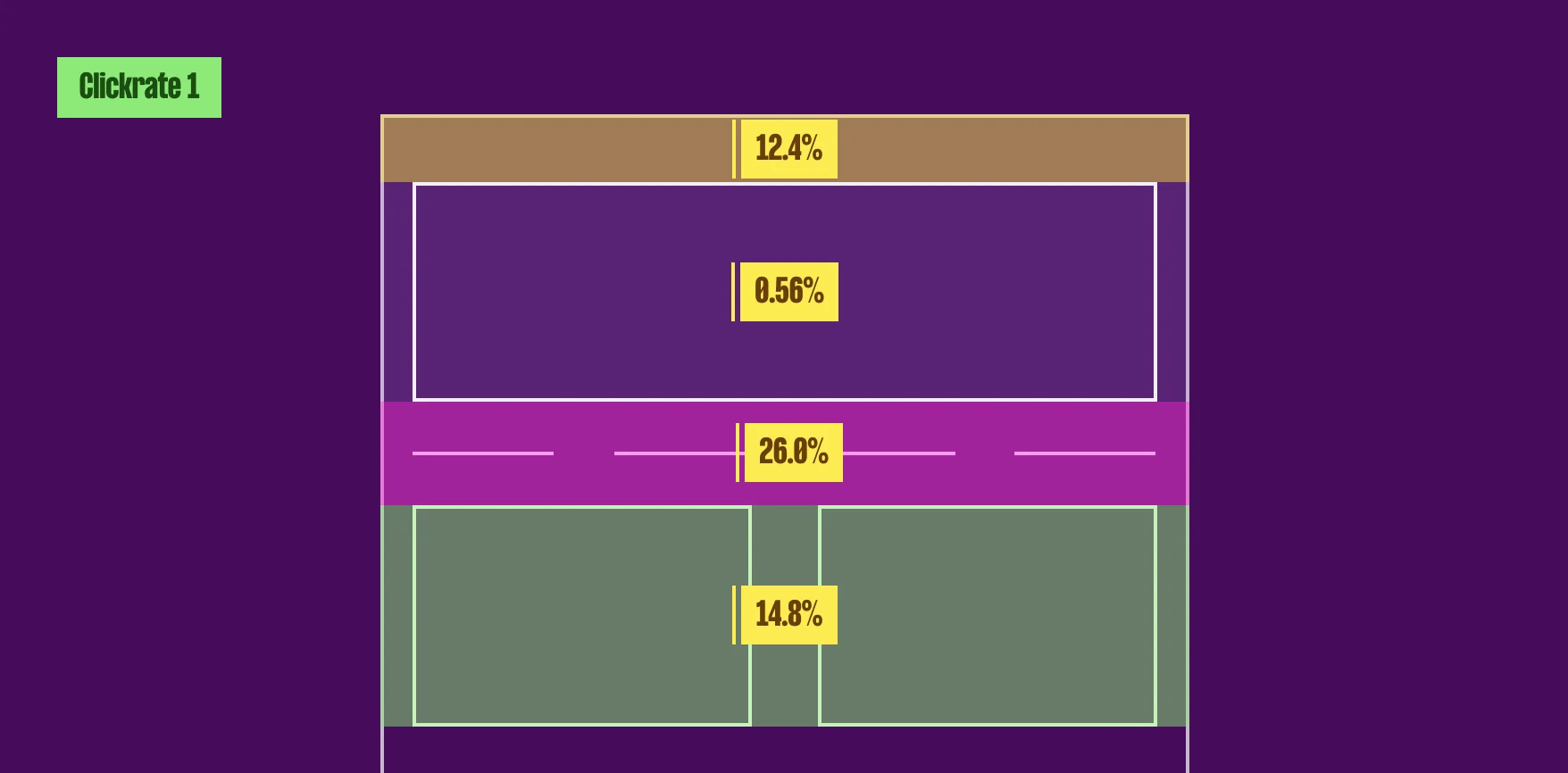

Mutual Exclusions: Avoid launching more than one test at a time on the same page or set of pages unless they’re designed with mutual exclusions and you have a high volume of traffic. This helps in isolating the effects of each test.

Example: Imagine you’re testing a new headline (Test A) and a new call-to-action button (Test B) on your homepage. To avoid interaction effects, you decide to run these tests on separate traffic segments.

Test A: 50% of visitors see either Headline 1 or Headline 2.

Test B: The other 50% see either Button 1 or Button 2.

By ensuring that no visitor is part of both tests simultaneously, you isolate the effects of each change, reducing the risk of skewed data.
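Here's a rough sketch of how that split could work in code. It isn't tied to any particular testing tool: the function names, salts, and variant labels are all made up for illustration, and it assumes you have a stable visitor ID to hash. The idea is to hash the ID once to decide which test a visitor belongs to, then hash it again with a different salt to pick the variant inside that test.

```python
import hashlib


def bucket(user_id: str, salt: str, buckets: int = 100) -> int:
    """Map a visitor to a stable bucket in [0, buckets) using a salted hash."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def assign_exclusive(user_id: str) -> tuple[str, str]:
    """Return (test, variant). Each visitor lands in exactly one of the two tests."""
    # First split (hypothetical salt): which test the visitor belongs to, 50/50.
    if bucket(user_id, "exclusion-layer") < 50:
        test, variants = "test_a_headline", ("headline_1", "headline_2")
    else:
        test, variants = "test_b_button", ("button_1", "button_2")
    # Second split, salted with the test name: which variant within that test.
    return test, variants[bucket(user_id, test, buckets=2)]


if __name__ == "__main__":
    for uid in ("visitor-1", "visitor-2", "visitor-3"):
        print(uid, assign_exclusive(uid))
```

Because the assignment depends only on the visitor ID and the salts, the same visitor always gets the same test and variant on every visit. The trade-off is the one described above: each test only sees half of your traffic, so it takes longer to reach a conclusion.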

Test-by-Test Consideration: Analyze the potential for interaction effects with each new test. Some scenarios allow overlap without meaningfully distorting your data, provided you have plenty of traffic and variations are equally distributed among the different tests.

Example: You’re running a test on the product page to change the product image (Test C) and another test to change the product description (Test D).

Scenario Analysis: You analyze the potential interaction effects and conclude that the product image and description changes might have minimal overlap in user attention.

Overlap Consideration: Since your site has high traffic and variations are equally distributed, you decide that the overlap between these tests is acceptable.

You proceed with running both tests simultaneously, confident that any minor interactions won’t significantly corrupt your data.
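If you do let two tests overlap, you can sanity-check afterwards whether they interacted. One simple way, sketched below, is to compare the lift from Test D among visitors who saw the Test C control with the lift among visitors who saw the Test C variant. The function names and the numbers are invented for illustration; they are not real results.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Conversion rate for one cell; 0.0 if the cell has no visitors."""
    return conversions / visitors if visitors else 0.0


def interaction_contrast(cells: dict) -> float:
    """Difference between Test D's lift under each arm of Test C.

    `cells` maps (test_c_arm, test_d_arm) -> (conversions, visitors),
    where each arm is "control" or "variant".
    """
    rate = {arms: conversion_rate(*counts) for arms, counts in cells.items()}
    lift_d_under_c_control = rate[("control", "variant")] - rate[("control", "control")]
    lift_d_under_c_variant = rate[("variant", "variant")] - rate[("variant", "control")]
    return lift_d_under_c_variant - lift_d_under_c_control


if __name__ == "__main__":
    # Made-up counts: (conversions, visitors) for each combination of arms.
    example = {
        ("control", "control"): (100, 1000),
        ("control", "variant"): (120, 1000),
        ("variant", "control"): (110, 1000),
        ("variant", "variant"): (130, 1000),
    }
    # In this example Test D adds the same lift under both arms of Test C,
    # so the contrast comes out at (approximately) zero.
    print(interaction_contrast(example))
```

A contrast near zero suggests the two changes are simply adding up. A contrast that is large relative to the individual lifts is a hint that the tests are interacting, although with real traffic you would still want a proper significance test before reading much into it, since small differences between cells are often just noise.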

Attribution vs. Impact: Evaluate what’s more crucial for your strategy—detailed attribution or overall impact. This decision will guide how aggressively you run simultaneous tests.

Example: Your goal is to increase overall conversions on your site. You’re considering running a test on the checkout page (Test E) to streamline the checkout process and another test on the homepage (Test F) to highlight a new promotional offer.

Detailed Attribution: If you need precise data on which change (checkout process vs. promotional offer) specifically led to conversions, you might opt to run these tests separately. That matters because an interaction effect is a situation where the effect of one variable on an outcome depends on the level of another variable. In CRO terms, the impact of one change (like a new call-to-action button) can vary depending on another factor (such as the color scheme of the website).

Overall Impact: If your primary goal is to boost conversions quickly and you’re less concerned about pinpointing the exact source of the improvement, you might choose to run both tests simultaneously.

In this case, you decide that the overall impact on conversions is more important than detailed attribution. You run both tests at the same time, focusing on maximizing business growth rather than perfecting attribution.

TL;DR

Interaction effects? Sure, they’re a thing, but don’t let them paralyze you. In the world of CRO, being too cautious can be just as risky as being reckless. You’ve got to strike a balance between getting it right and getting it done.

Remember, this is about business growth, not crafting the perfect academic paper. Run your tests, watch the metrics that actually affect your bottom line, and keep pushing forward. Let the competitors worry about perfection while you're busy boosting your profits.

Our Process

Over 6 months, we evaluated critical aspects like:

CRO program & team maturity

Current CRO processes and testing velocity, training gaps and operational bottlenecks

Strategic planning & prioritization

Experiment prioritization frameworks, hypothesis quality and consistency

At the end, we delivered

- Comprehensive experimentation playbook

- Quarterly CRO roadmap tailored to business priorities

- A fully designed and implemented program management hub

Royal Caribbean and Celebrity Cruises were operating CRO initiatives but needed a more structured and scalable approach. Chirpy partnered closely with eCommerce, content, and merchandising teams to build internal expertise, align on priorities, and create sustainable processes for running high-velocity tests. Beyond frameworks and playbooks, we instilled a culture of experimentation and provided hands-on guidance to implement best-in-class testing programs — from statistical design through to action-oriented reporting.
